Anthropic’s Data Privacy Decision: Users of Claude AI Assistant Face Crucial Choice by September 28

AI company Anthropic has set a deadline of September 28, 2025 for users of its Claude AI assistant to make a decision about their data privacy. Users must either consent to or opt out of having their chat and code transcripts used to train future AI models. The choice also determines how long their data is retained.

Users who consent will have their data retained for five years, a substantial increase over the current, shorter retention periods. Users who opt out will have their conversations excluded from training datasets. The policy applies to the consumer tiers: Claude Free, Pro, and Max. Enterprise accounts are unaffected by the change.

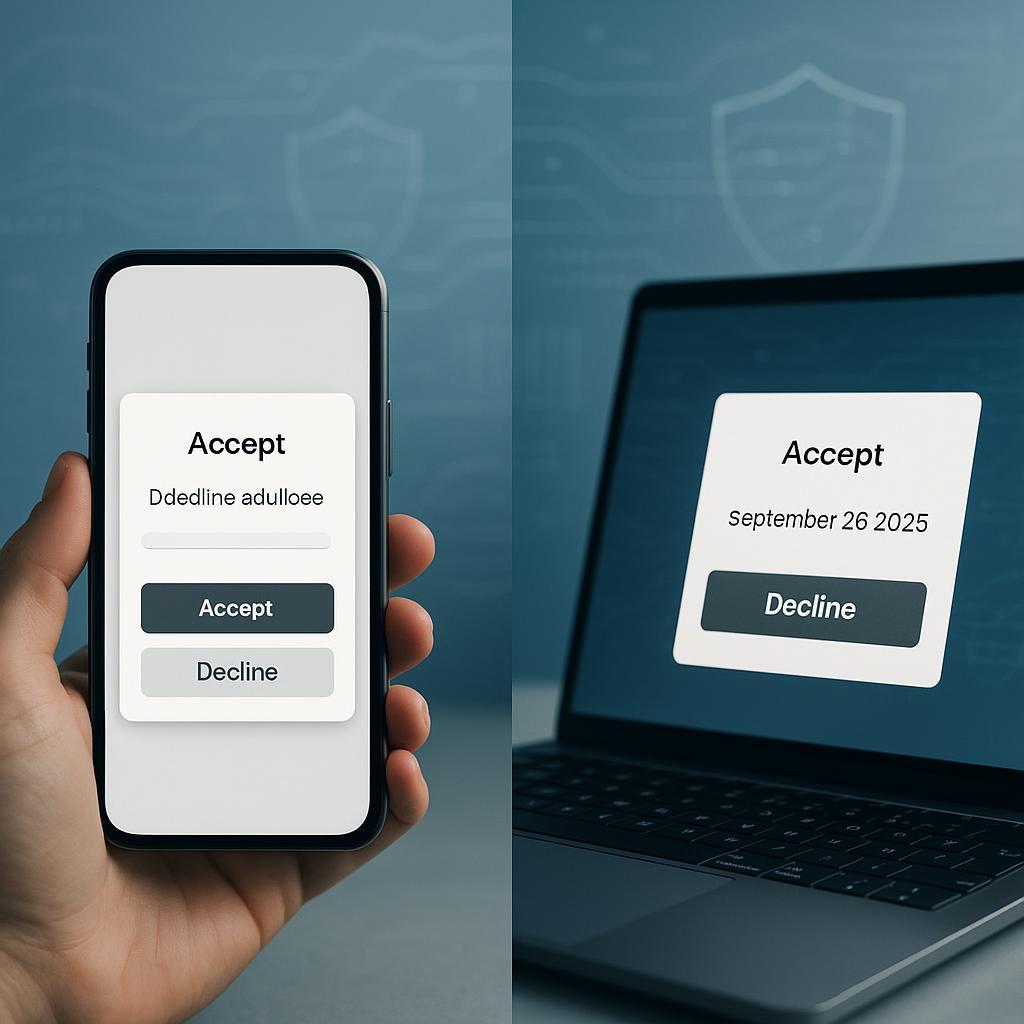

A pop-up prompt will appear upon login, requiring users to make their choice. The setting can be changed after the initial decision. However, Anthropic warns that data already used for training cannot be retroactively removed from existing models.

The company has assured users that it does not sell their data and that it uses automated filters to protect sensitive information during training. The move reflects growing industry pressure for greater transparency around AI training practices and user consent.

The deadline comes as the AI industry faces increased scrutiny over its data usage practices. Regulators worldwide are examining how AI companies collect and use user-generated content to improve their models. Anthropic’s approach is more transparent than that of some competitors, which incorporate user data without explicit consent.